The AI-Generated Content Detection Problem

As artificial intelligence increasingly shapes our digital landscape, distinguishing between human-written and AI-generated content has become a critical challenge. Google DeepMind has taken a notable step by releasing its AI text watermarking technology, SynthID, as open source. The release gives developers outside Google a tested way to mark and later identify AI-generated text, supporting more responsible AI development and deployment.

Understanding SynthID and AI Text Watermarking

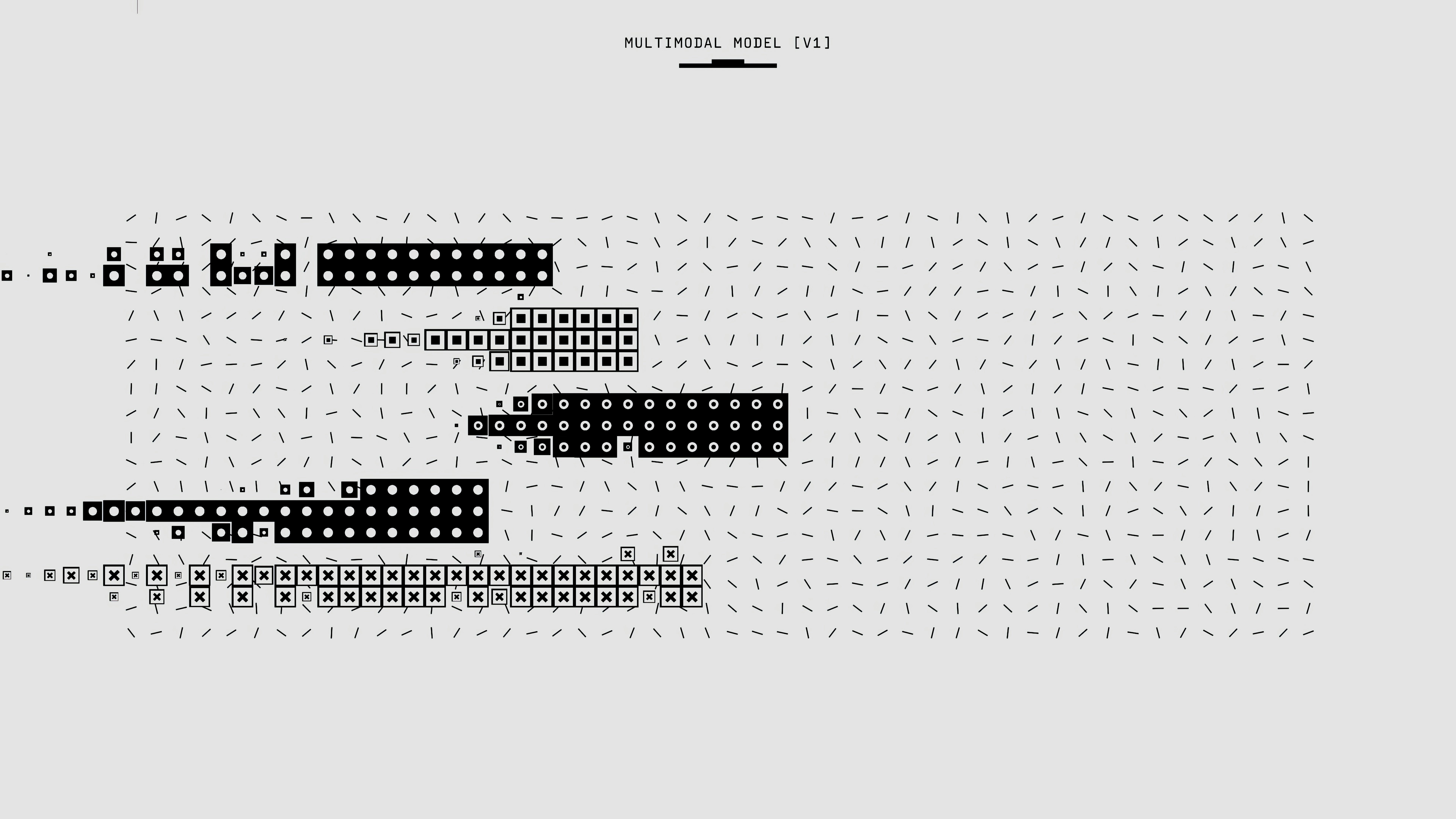

At its core, SynthID is an approach to content authentication built for AI-generated text. It embeds an imperceptible watermark into text during the generation process, leaving a statistical signature that can be checked later. Rather than altering finished text, it works at the point where large language models (LLMs) decide what to write next.

The technical architecture of SynthID builds on the token-based nature of LLMs. These models break language down into smaller units called tokens, which can be individual characters, whole words, or parts of words. At each step of generation, the model assigns every candidate token a probability of appearing next in the sequence. SynthID adjusts these probability distributions during generation, guided by a secret key, so that the tokens it selects carry a subtle but detectable statistical pattern that serves as the watermark.
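The mechanism can be illustrated with a toy Python sketch. It is not SynthID's code. Google DeepMind's Nature paper describes a sampling scheme it calls Tournament sampling, and what follows is a heavily simplified version of that idea under several assumptions: a uniform stand-in "model" over a hypothetical 1,000-token vocabulary, a SHA-256 hash in place of SynthID's keyed scoring functions, and made-up names (`g_value`, `tournament_sample`, `generate`, `score`) and parameters. At each step, a few candidate tokens are sampled from the model's distribution and a keyed pseudorandom score decides which one is kept; a detector holding the same key then checks whether the kept tokens score higher than chance.

```python
import hashlib
import random

# Toy sketch only: every name and parameter here is hypothetical and is not
# SynthID's real API, key format, or configuration.
VOCAB_SIZE = 1000  # size of the toy vocabulary
CONTEXT_LEN = 3    # how many preceding tokens feed into each score
NUM_LAYERS = 2     # tournament layers, so 2**NUM_LAYERS candidates per step


def g_value(key, context, token, layer):
    """Keyed pseudorandom bit (0 or 1) for a candidate token in a given context."""
    data = f"{key}|{context}|{token}|{layer}".encode()
    return hashlib.sha256(data).digest()[0] & 1


def tournament_sample(probs, key, context, rng):
    """Sample candidates from the model, then keep the one that wins a keyed tournament."""
    candidates = rng.choices(range(len(probs)), weights=probs, k=2 ** NUM_LAYERS)
    for layer in range(NUM_LAYERS):
        winners = []
        for a, b in zip(candidates[::2], candidates[1::2]):
            ga = g_value(key, context, a, layer)
            gb = g_value(key, context, b, layer)
            if ga == gb:
                winners.append(rng.choice((a, b)))  # tie: choose at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]


def generate(num_tokens, key=None, seed=0):
    """Generate tokens from a uniform stand-in 'model'; watermark only if a key is given."""
    rng = random.Random(seed)
    probs = [1.0] * VOCAB_SIZE  # placeholder for an LLM's next-token distribution
    tokens = []
    for _ in range(num_tokens):
        context = tuple(tokens[-CONTEXT_LEN:])
        if key is None:
            tokens.append(rng.choices(range(VOCAB_SIZE), weights=probs)[0])
        else:
            tokens.append(tournament_sample(probs, key, context, rng))
    return tokens


def score(tokens, key):
    """Mean g-value over all tokens and layers: near 0.5 by chance, higher if watermarked."""
    total = 0
    for i, token in enumerate(tokens):
        context = tuple(tokens[max(0, i - CONTEXT_LEN):i])
        total += sum(g_value(key, context, token, layer) for layer in range(NUM_LAYERS))
    return total / (len(tokens) * NUM_LAYERS)


if __name__ == "__main__":
    key = 12345  # hypothetical secret watermarking key
    marked = generate(300, key=key, seed=1)
    plain = generate(300, seed=2)
    print(f"watermarked: {score(marked, key):.2f}")
    print(f"unwatermarked: {score(plain, key):.2f}")
    print(f"watermarked, wrong key: {score(marked, 999):.2f}")
```

Run as a script, the watermarked sequence should score well above 0.5 with the correct key, while unwatermarked text, or watermarked text checked with the wrong key, stays close to 0.5. A production detector would typically turn a score like this into a calibrated decision rather than reading the raw average.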

According to Pushmeet Kohli, Vice President of Research at Google DeepMind, "Now, other [generative] AI developers will be able to use this technology to help them detect whether text outputs have come from their own [large language models], making it easier for more developers to build AI responsibly."

The Development and Testing Process

Google DeepMind validated SynthID at scale. In a live, real-world experiment, the company analyzed approximately 20 million responses generated through its Gemini platform, one of the largest tests of AI text watermarking to date. The experiment measured both how well the watermark could be detected and how it affected users.

The testing process focused on two critical aspects:

Detection accuracy and reliability

Impact on content quality and user experience

The results, published in Nature, indicate that SynthID preserved output quality while still allowing reliable detection. Users showed no significant preference between watermarked and unwatermarked responses, suggesting that the watermark does not come at a noticeable cost to the user experience.

Capabilities and Limitations

Key Features

The watermark is designed to withstand several common kinds of modification, including:

Partial text extraction

Minor editing and rewording

Format adjustments

Cross-platform sharing
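A toy model also suggests why cropping and light editing are tolerated. If each token is scored only against the few tokens before it, an excerpt can still be scored on its own, and a replaced token disturbs just its own score and those of its immediate successors. The sketch below is a simplified illustration, not SynthID's implementation; the names, the uniform stand-in model, the SHA-256 scoring, and the use of random token replacement as a proxy for human edits are all assumptions. It compares a watermarked sequence with a cropped excerpt, a copy with 5% of tokens replaced, and a copy with 90% replaced.

```python
import hashlib
import random

# Toy sketch with hypothetical names and parameters; not SynthID's implementation.
VOCAB_SIZE, CONTEXT_LEN, NUM_LAYERS = 1000, 3, 2


def g_value(key, context, token, layer):
    """Keyed pseudorandom bit for a token given its preceding context."""
    data = f"{key}|{context}|{token}|{layer}".encode()
    return hashlib.sha256(data).digest()[0] & 1


def watermarked_tokens(num_tokens, key, rng):
    """Uniform stand-in 'model' whose samples are chosen by a keyed tournament."""
    tokens = []
    for _ in range(num_tokens):
        context = tuple(tokens[-CONTEXT_LEN:])
        candidates = [rng.randrange(VOCAB_SIZE) for _ in range(2 ** NUM_LAYERS)]
        for layer in range(NUM_LAYERS):
            nxt = []
            for a, b in zip(candidates[::2], candidates[1::2]):
                ga, gb = g_value(key, context, a, layer), g_value(key, context, b, layer)
                # Higher g-value wins; ties are broken at random.
                nxt.append(rng.choice((a, b)) if ga == gb else (a if ga > gb else b))
            candidates = nxt
        tokens.append(candidates[0])
    return tokens


def score(tokens, key):
    """Mean g-value over tokens and layers (about 0.5 for unwatermarked text)."""
    bits = [
        g_value(key, tuple(tokens[max(0, i - CONTEXT_LEN):i]), tok, layer)
        for i, tok in enumerate(tokens)
        for layer in range(NUM_LAYERS)
    ]
    return sum(bits) / len(bits)


def edit(tokens, fraction, rng):
    """Replace a random fraction of tokens with random ones (a crude stand-in for rewording)."""
    return [rng.randrange(VOCAB_SIZE) if rng.random() < fraction else t for t in tokens]


if __name__ == "__main__":
    rng = random.Random(0)
    key = 2024  # hypothetical secret key
    text = watermarked_tokens(1000, key, rng)
    print(f"full text:       {score(text, key):.2f}")
    print(f"cropped excerpt: {score(text[400:700], key):.2f}")
    print(f"5% edited:       {score(edit(text, 0.05, rng), key):.2f}")
    print(f"90% rewritten:   {score(edit(text, 0.90, rng), key):.2f}")
```

With these settings the excerpt and the lightly edited copy should still score clearly above 0.5, while the heavily rewritten copy drifts back toward chance, which mirrors the reduced reliability on extensively rewritten content.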

SynthID's text watermark also complements Google's watermarking for AI-generated images and video, giving the company a common approach to authentication across content types.

Current Limitations

Despite its robust features, SynthID faces certain challenges:

Reduced reliability with extensively rewritten content

Weaker detection after text is translated into another language

Lower effectiveness on highly factual responses, where there are few alternative word choices for the watermark to exploit

Technical constraints in certain specialized use cases

As Soheil Feizi, Associate Professor at the University of Maryland, notes, "Achieving reliable and imperceptible watermarking of AI-generated text is fundamentally challenging, especially in scenarios where LLM outputs are near deterministic."
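Feizi's point about near-deterministic outputs can be seen in a toy model. A keyed tournament can only steer generation when the sampled candidates differ; if the model puts almost all of its probability on one token, every candidate tends to be that token and the choice carries no watermark. The sketch below is a simplified illustration rather than SynthID's implementation, and its names and the `peak` parameter are hypothetical: at each position, one token (picked by position, standing in for the single correct answer in factual text) is drawn with probability `peak`, and otherwise a token is drawn uniformly from the whole vocabulary.

```python
import hashlib
import random

# Toy sketch with hypothetical names and parameters; not SynthID's implementation.
VOCAB_SIZE, CONTEXT_LEN, NUM_LAYERS = 1000, 3, 2


def g_value(key, context, token, layer):
    """Keyed pseudorandom bit for a token given its preceding context."""
    data = f"{key}|{context}|{token}|{layer}".encode()
    return hashlib.sha256(data).digest()[0] & 1


def sample_from_model(position, peak, rng):
    """Stand-in model: a position-dependent 'correct' token is drawn with probability `peak`."""
    favourite = (position * 919) % VOCAB_SIZE  # arbitrary constant, distinct token per position
    if rng.random() < peak:
        return favourite
    return rng.randrange(VOCAB_SIZE)  # otherwise draw uniformly from the whole vocabulary


def generate(num_tokens, key, peak, seed=0):
    """Watermarked generation: sample 2**NUM_LAYERS candidates, keep the keyed tournament winner."""
    rng = random.Random(seed)
    tokens = []
    for pos in range(num_tokens):
        context = tuple(tokens[-CONTEXT_LEN:])
        candidates = [sample_from_model(pos, peak, rng) for _ in range(2 ** NUM_LAYERS)]
        for layer in range(NUM_LAYERS):
            nxt = []
            for a, b in zip(candidates[::2], candidates[1::2]):
                ga, gb = g_value(key, context, a, layer), g_value(key, context, b, layer)
                # Identical candidates always tie, so the watermark has nothing to choose between.
                nxt.append(rng.choice((a, b)) if ga == gb else (a if ga > gb else b))
            candidates = nxt
        tokens.append(candidates[0])
    return tokens


def score(tokens, key):
    """Mean g-value over tokens and layers (about 0.5 when no watermark is detectable)."""
    bits = [
        g_value(key, tuple(tokens[max(0, i - CONTEXT_LEN):i]), tok, layer)
        for i, tok in enumerate(tokens)
        for layer in range(NUM_LAYERS)
    ]
    return sum(bits) / len(bits)


if __name__ == "__main__":
    key = 7  # hypothetical secret key
    for peak in (0.0, 0.5, 0.99):
        print(f"peak={peak}: score {score(generate(500, key, peak), key):.2f}")
```

As `peak` approaches 1, the candidates almost always coincide, so the score falls back toward 0.5 even though every token passed through the watermarking step. This is one way to see why highly factual, low-variety responses are harder to watermark.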

Industry Impact and Expert Perspectives

The decision to make SynthID open source has been welcomed within the AI community. João Gante, a machine learning engineer at Hugging Face, emphasizes the importance of this move: "With better accessibility and the ability to confirm its capabilities, I want to believe that watermarking will become the standard, which should help us detect malicious use of language models."

SynthID is also available through Hugging Face's Transformers library, which makes broad adoption easier. This accessibility allows developers to:

Implement watermarking in their own models

Keep their watermarking keys private, so that only they can check text for their watermark

Contribute to industry standardization efforts

Develop custom implementations

Irene Solaiman, Hugging Face's head of global policy, provides a balanced perspective: "Watermarking is one aspect of safer models in an ecosystem that needs many complementing safeguards." This insight highlights the importance of viewing SynthID as part of a broader approach to AI safety and authentication.

Future Implications and Applications

The open-source release of SynthID opens numerous possibilities for future development:

Development Opportunities

Extended language model compatibility

Enhanced detection algorithms

Improved cross-platform integration

Advanced tampering resistance

Broader Impact

Strengthened content verification systems

More effective misinformation prevention

Industry-wide standardization efforts

Enhanced trust in AI-generated content

Conclusion

Google DeepMind's decision to make SynthID open source represents a significant step forward in addressing the challenges of AI content authentication. As the technology continues to evolve and improve through community contributions, its impact on responsible AI development will likely grow. The combination of robust technical capabilities, extensive testing, and community accessibility positions SynthID as a crucial tool in the ongoing effort to build trustworthy AI systems.

For developers and organizations looking to implement AI content authentication, SynthID offers a tested, openly available option that balances detectability with output quality, while working best as one safeguard among several. As the AI landscape continues to evolve, tools like SynthID will play an increasingly important role in maintaining trust and transparency in digital content.